Ramen (ラーメン)

I had heard the term “Ramen” before, and about the hype around ramen shops all over the world, but never paid attention to it. Then, a couple of weeks ago, when dining out with my girlfriend at Karl’s kitchen in Breuninger Stuttgart, they offered ramen soup. Always open to culinary experiments, I tried it … and was positively surprised. It tasted delicious.

What followed was, as is my style, thorough research into ramen: where does it come from, and how do you prepare it yourself? I quickly found some basic recipes for this Japanese fast food and set out to get practical.

First of all, there are three steps to making a ramen soup:

- Base broth

- Spice broth

- Soup with toppings

When you watch recipes on YouTube, you see that, for the sake of efficiency, the distinction between base and spice broth is often ignored and only a single broth is prepared, according to local habits. Here we want to stick as close to the original as we can.

Base Broth

The first thing to note is that ramen is eaten all over Japan, a country that stretches from subtropical Okinawa in the south up to the frozen north of Hokkaido. So it is natural that the basic style differs by region and is adapted to what’s available there. This is why one distinguishes different ramen types:

- Shōyu ramen (醤油, “soy sauce”) with soy sauce

- Shio ramen (塩, “salt”) based on fish and seafood

- Miso ramen (味噌) based on fermented soy beans (miso paste)

- Karē ramen (カレー, “curry”) with curry

- Tonkotsu ramen (豚骨ラーメン) based on pork meat and bones

You can find quite authentic recipes on Lecker (Tonkotsu ramen) and Chefkoch. For my first attempt, I did indeed follow the basic recipe with pork meat and bones, as that’s what I could get. Pork bones are actually hard to find, as they do not keep very well and are not normally used for sauces or broth; beef bones are used instead. So this was a lucky coincidence.

So I used:

- Pork bones with meat

- Mixed vegetables for soup (mirepoix)

- Garlic

- Chicken wings

- Kombu seaweed – hard to get, apparently because it is expensive

For detailed preparation, see the recipes. I had to substitute wakame seaweed for the kombu. The broth cooks for 2 hours. It can be frozen; take care not to fill the bottles or other containers too full, so that they do not burst.

Spice Broth

The spice broth is made, at short notice, from

- Soy sauce

- Bonito flakes (dried, thinly sliced tuna) – available at the local Asian shop

- The meat from the base broth

The spice broth does not have to be cooked long in advance; you can make it when needed, as it does not take much time. It is added to the base broth to create the soup’s broth.

Soup and Toppings

Now you can start creating the soup itself. Ramen is like pizza: you can add what you like if you are not following a traditional recipe. Here are some ideas:

- Sautéed mushrooms are always good: shiitake, enoki or other Asian mushrooms are a must-have.

- Spring onions; I like them sautéed as well

- Pak choi, again briefly sautéed

- Sprouts, sautéed

- Meat, fish from the broth or shrimp

- Roasted vegetables like corn or thinly sliced carrots

- Boiled eggs

- Pumpkin

- Sesame paste

And of course noodles, either ramen noodles or other Asian noodles like soba or udon. Ramen noodles are made of wheat, soba of buckwheat. Arrange everything neatly, with the halved eggs on top, and the ramen soup is ready. It’s not really fast to prepare, with all the roasting and the hours of broth cooking, but if you plan ahead you can re-use the frozen broth, and then it’s not too much effort.

Delicious and enough for a whole meal. Enjoy!

And next time another variant …

Peter

Letztes Jahr hat Barbara doch tatsächlich ein eigenes Buch geschrieben, “

Letztes Jahr hat Barbara doch tatsächlich ein eigenes Buch geschrieben, “ As I’m currently involved with lots of openssl automation at work, I bought the book “Bulletproof SSL and TLS” from Ivan Ristić. See the book’s site at

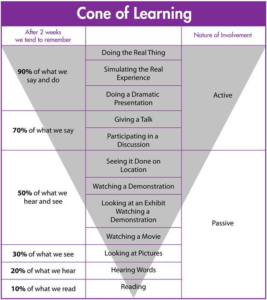

As I’m currently involved with lots of openssl automation at work, I bought the book “Bulletproof SSL and TLS” from Ivan Ristić. See the book’s site at  There is the model of “cone of learning” from Edgar Dale, I think. It explains how good media are for learning. The book is doing pretty bad in this model. It is passive learning and you remember only small parts of what you read. In contrast a video or podcast is remembered much more. And that is probably right in the general. How long do you remember what you read a year ago in a book? Nevertheless the depth is a different in a book in contrast to other media. and I would say it needs to stay in the learning mix also these days, electronic or not.

There is the model of “cone of learning” from Edgar Dale, I think. It explains how good media are for learning. The book is doing pretty bad in this model. It is passive learning and you remember only small parts of what you read. In contrast a video or podcast is remembered much more. And that is probably right in the general. How long do you remember what you read a year ago in a book? Nevertheless the depth is a different in a book in contrast to other media. and I would say it needs to stay in the learning mix also these days, electronic or not.