The Problem with AI and ML today

I have to admit that I’m not an expert in AI or machine learning (ML), but I think I understand it well enough at a high level. After all, I did some work with big data and Hadoop and have already read quite a bit about AI and ML. And from the start I had this uneasy feeling that the current state of AI, even with deep learning, is not really intelligent. Yes, it works up to a certain level: you can see this in the current progress in automated driving, and in IIoT use cases such as visual inspection or material checks that are based on AI models and deep learning.

But what always struck me is that the system behind all this great functionality is really dumb and has no idea what it has learned. Nobody can look at the “mental” model of the AI model and explain why it can detect an object or recognise a pattern. It just works on pure data. That is exactly the point: AI today detects something interesting based solely on the input data it has been trained with.

A couple of weeks ago I stumbled over a book on Amazon and bought it. This week I started reading “The Book of Why” by Judea Pearl and Dana Mackenzie. The book is about the theory of causal relations and the need for causation in artificial intelligence.

Already the first chapter struck me like a lightning bolt. Judea explained exactly what I had always felt: that current AI sits on level 1 of the ladder of causation. Level 1 means that learning is based on associations that the algorithm finds in the data. The mechanism behind this is, in the end, statistics and probability, that’s all.

Associations are detected in the data because the AI model has been trained on similar patterns, and when it sees one again it can detect it. But the pattern needs to be at least similar to something learned, which is why it is so important to have good training data, and tons of it. If there is a completely new pattern in the data that the algorithm hasn’t learned yet, it cannot detect it. That is why the intelligence of such an algorithm is on the level of an animal, while any three-year-old child is more intelligent.
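
To make this a bit more tangible, here is a minimal sketch of purely association-based detection, written as a tiny nearest-neighbour classifier in Python. Everything in it is an assumption for illustration: the two-number "feature vectors", the labels `ok` and `scratch`, and the `max_distance` threshold are made up and do not come from any real inspection system.

```python
import math

# Hypothetical training data: (feature vector, label) pairs.
TRAINING = [
    ((1.0, 1.0), "ok"),
    ((1.2, 0.9), "ok"),
    ((5.0, 5.1), "scratch"),
    ((4.8, 5.3), "scratch"),
]


def nearest(sample):
    """Return the training example closest to the sample (Euclidean distance)."""
    return min(TRAINING, key=lambda item: math.dist(item[0], sample))


def classify(sample, max_distance=1.0):
    """Label a sample by association with the most similar training example.

    If nothing similar was seen during training, the only honest answer
    is "unknown": the model has no other knowledge to fall back on.
    """
    features, label = nearest(sample)
    if math.dist(features, sample) > max_distance:
        return "unknown"
    return label


print(classify((1.1, 1.0)))  # close to the learned "ok" examples
print(classify((9.0, 0.5)))  # a pattern unlike anything in the training data
```

Without the distance threshold, the second sample would simply be forced into whichever learned label happens to be nearest, which is the other face of the same limitation.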

And worse, the model doesn’t really know what it has learned; the representation is just numerical factors, e.g. the weights in a neural network. There is really no knowledge representation as such.

For quite some time now, one topic has always been in my focus: semantic web technology, the way knowledge can be represented in a knowledge graph, and how to work with that in real-world technology. Before, that was in the space of IT management; now it is in the area of IIoT.

And here is the point that hit me like a lamp post. On the one hand there is classical AI technology with its ability to automatically learn and detect patterns. On the other hand there is semantic technology with its semantic data models and query mechanisms on formal, machine-readable knowledge representations.

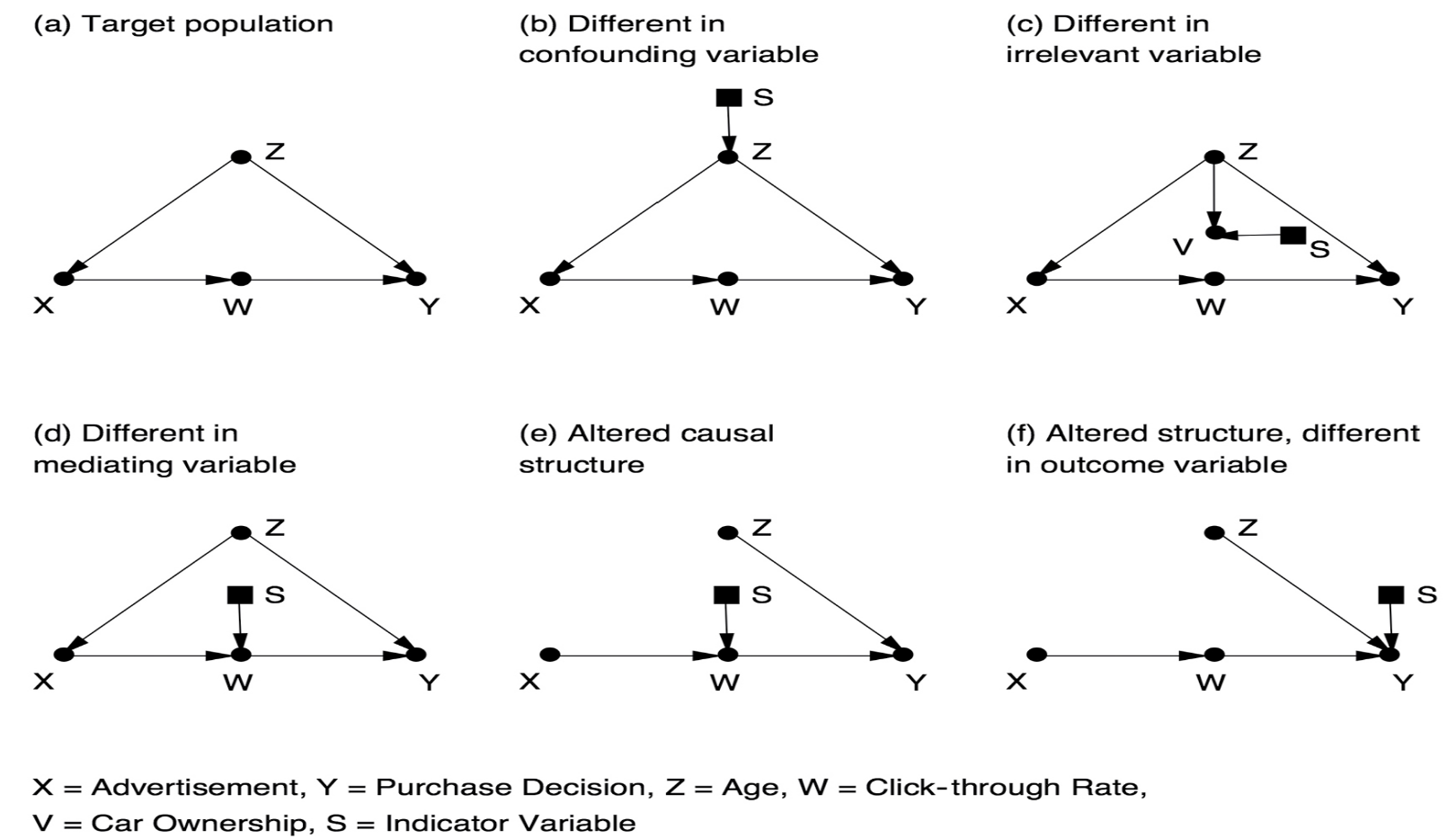

And the difference at the next level, level 2 in Judea’s ladder of causation, is exactly that one has a causal model, not just data. The causal model is represented as a directed graph of causal relations with numerical factors on the edges.
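
As a rough sketch of what such a model could look like in code, the following Python snippet stores a directed graph with a numerical factor on each edge. The variable names (`spindle_speed`, `tool_temperature` and so on) and all factors are invented for illustration. The `total_effect` function additionally assumes a linear, acyclic model in which the effect along a path is the product of its edge factors and parallel paths add up; that is a simplifying assumption of this sketch, not a summary of the book’s full framework.

```python
# Hypothetical causal model: (cause, effect) -> numerical factor on the edge.
CAUSAL_EDGES = {
    ("spindle_speed", "tool_temperature"): 0.6,
    ("coolant_flow", "tool_temperature"): -0.8,
    ("tool_temperature", "surface_defects"): 0.5,
    ("spindle_speed", "surface_defects"): 0.1,
}


def direct_effects(node):
    """Return (effect, factor) pairs for all edges leaving the given node."""
    return [(effect, factor)
            for (cause, effect), factor in CAUSAL_EDGES.items()
            if cause == node]


def total_effect(cause, effect):
    """Sum, over all directed paths, the product of the edge factors.

    Only meaningful under the assumptions stated above: a linear model
    and a graph without cycles (a cycle would make this recurse forever).
    """
    if cause == effect:
        return 1.0
    return sum(factor * total_effect(nxt, effect)
               for nxt, factor in direct_effects(cause))


# Direct path (0.1) plus the path via tool_temperature (0.6 * 0.5).
print(total_effect("spindle_speed", "surface_defects"))
# Only one path: -0.8 * 0.5.
print(total_effect("coolant_flow", "surface_defects"))
```

The point of the sketch is that the graph itself is readable: unlike the weights of a trained network, each edge says what influences what.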

Now that sounds very familiar to me: it is easy to represent as a semantic graph in RDF or OWL! Causal relations would be represented as relations in the semantic model, as one of its most important relation types.
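
Here is one possible way this could look, sketched in plain Python without any RDF library. Since a plain RDF triple cannot carry a number on the edge itself, the sketch models each causal relation as its own node with `cause`, `effect` and `factor` properties. The namespace `http://example.org/causal#` and all property and resource names are hypothetical; a real model would use a proper vocabulary, and probably a library such as rdflib instead of hand-built strings.

```python
EX = "http://example.org/causal#"  # hypothetical namespace
XSD_DOUBLE = "http://www.w3.org/2001/XMLSchema#double"


def iri(name):
    """Build an N-Triples IRI term in the hypothetical example namespace."""
    return f"<{EX}{name}>"


def double(value):
    """Build an N-Triples typed literal for a numerical factor."""
    return f'"{value}"^^<{XSD_DOUBLE}>'


def causal_relation(rel_id, cause, effect, factor):
    """Describe one causal edge as three triples about a relation node."""
    node = iri(rel_id)
    return [
        (node, iri("cause"), iri(cause)),
        (node, iri("effect"), iri(effect)),
        (node, iri("factor"), double(factor)),
    ]


def to_ntriples(triples):
    """Serialize (subject, predicate, object) tuples as N-Triples lines."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


def match(triples, s=None, p=None, o=None):
    """Very small stand-in for a query: None acts as a wildcard."""
    return [t for t in triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)]


triples = (causal_relation("rel1", "spindle_speed", "tool_temperature", 0.6)
           + causal_relation("rel2", "tool_temperature", "surface_defects", 0.5))

print(to_ntriples(triples))
# Which relations have tool_temperature as their cause?
print(match(triples, p=iri("cause"), o=iri("tool_temperature")))
```

The `match` function only hints at what a real query language such as SPARQL would do, but it shows the idea: the causal knowledge is explicit and can be searched.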

Technically there are of course a couple of practical questions about how an AI/ML model can work with a semantic graph model. Probably one needs to transform the knowledge graph into an ANN first. It would be interesting to speak with an AI expert about this.

I would even go so far as to say that it would be a benefit to represent learned associations in such a model as well. Knowledge, in the end, comes in different types: there is factual knowledge, rules, causal relations, associations and other relations that are not causal. If we represent all of these in a semantic model, we come closer to how we think the human brain works. As human beings we record these relations as well, and we are aware of them; we can search and access them, just like a knowledge graph!

Maybe this is, in the end, the way we can bring computers to at least level two of the ladder of causation, and do this for our applications in IIoT as well.

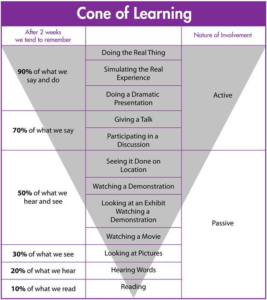

There is the “cone of learning” model, from Edgar Dale, I think. It describes how well different media work for learning. The book does pretty badly in this model: reading is passive learning, and you remember only small parts of what you read. In contrast, a video or podcast is remembered much better. And that is probably right in general. How well do you remember what you read in a book a year ago? Nevertheless, a book offers a different depth than other media, and I would say it needs to stay in the learning mix these days too, electronic or not.
